The prompt for this week was:

Revisit an earlier SLOG posting of yours. See whether you agree or disagree with your earlier self.

I would like to revisit my blog post on recursion (link) because I believe my explanation in that post was incorrect, and I clearly only scratched the surface of the topic.

In that post I answered the question “How does recursion work?” in quite a confusing manner. So, I would like to earn some redemption points and provide another, hopefully better and more concise, explanation.

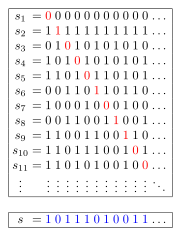

Simply put, recursion is solving a complex problem in terms of the solutions to smaller, less complex instances of the same problem, over and over again. A key word to remember when thinking about recursion is repetition. With this in mind, I would say that a recursive function is a function that calls itself repeatedly, each time on a simpler version of the problem, moving closer to the base case: the simplest instance, which can be answered directly without another recursive call.
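To make this concrete, here is a small sketch using factorial, a standard textbook example I picked for illustration (the function name `factorial` is my own choice, not from the course). Each call handles a slightly smaller number until it reaches the base case.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n."""
    # Base case: 0! is 1, so there is nothing left to break down.
    if n == 0:
        return 1
    # Recursive case: n! is n times the answer to the smaller problem (n - 1)!.
    return n * factorial(n - 1)


print(factorial(5))  # 120
```

Notice that every recursive call moves `n` one step closer to 0, which is what guarantees the repetition eventually stops.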

Something I find particularly helpful when I get confused about a concept is to compare it to another concept I have a better grasp of. I think the best way to understand recursive functions is to compare them with iterative functions.

| Iterative | Recursive |
| --- | --- |
| Go through whole thing each step | Break down into smaller problems |
| Repeats all code as is | Repeats simpler version of code |
| Stop when end of loop | Stop when base case is reached |
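As a rough illustration of the comparison, here is one problem, adding up a list of numbers, solved both ways. Both function names are hypothetical and chosen just for this example. The loop walks through the whole list and stops at the end; the recursive version splits off the first element and hands the smaller rest of the list to itself until it reaches the empty-list base case.

```python
from __future__ import annotations


def sum_iterative(numbers: list[int]) -> int:
    """Add up a list by walking through it with a loop."""
    total = 0
    for x in numbers:  # stops when the loop reaches the end of the list
        total += x
    return total


def sum_recursive(numbers: list[int]) -> int:
    """Add up a list by splitting off the first element on each call."""
    # Base case: an empty list sums to 0.
    if not numbers:
        return 0
    # Recursive case: the first element plus the sum of the smaller rest.
    return numbers[0] + sum_recursive(numbers[1:])


print(sum_iterative([3, 1, 4, 1, 5]))  # 14
print(sum_recursive([3, 1, 4, 1, 5]))  # 14
```

For a flat list like this the loop is arguably just as clear, which fits the idea that recursion shines mainly when a problem naturally breaks into smaller copies of itself.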

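One thing to note is that anything that can be done recursively can also be done iteratively, for example by keeping track of the pending work in an explicit stack instead of relying on function calls.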
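Here is a sketch of that idea, assuming a nested list of integers such as `[1, [2, [3, 4]], 5]` (my own example input; the function names are hypothetical). The recursive version reads very naturally, while the iterative version has to manage its own stack of items still waiting to be processed.

```python
from __future__ import annotations


def nested_sum_recursive(obj: int | list) -> int:
    """Sum every integer in a (possibly) nested list, recursively."""
    # Base case: a plain integer is its own sum.
    if isinstance(obj, int):
        return obj
    # Recursive case: add up the sums of each sub-item.
    return sum(nested_sum_recursive(sub) for sub in obj)


def nested_sum_iterative(obj: int | list) -> int:
    """Same result, using an explicit stack instead of recursive calls."""
    total = 0
    stack = [obj]  # items we still need to look at
    while stack:
        item = stack.pop()
        if isinstance(item, int):
            total += item
        else:
            stack.extend(item)  # push the sub-items to handle later
    return total


data = [1, [2, [3, 4]], 5]
print(nested_sum_recursive(data))  # 15
print(nested_sum_iterative(data))  # 15
```

The explicit `stack` is doing the job that Python's call stack does for us in the recursive version.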

In my old blog post I also talked about the benefits of recursion, and for the most part I still agree with what I said. Recursion is definitely neater and simpler, but only when the algorithm “naturally” lends itself to being recursive. I also still agree that it reduces redundancy, since we don’t need to duplicate any of our code.

I would like to add that learning recursion is very beneficial for two main reasons. Firstly, it is a great problem-solving exercise, since it requires a little more brain power than iterative thinking (at least from what I have encountered so far). Secondly, it develops logical thinking. Asking yourself how to break a problem down into tiny, less complicated pieces, and how those pieces fit together to solve the larger problem, really forces you to think in different ways.